Real-Time Generative Art: A Guide to StreamDiffusion and TouchDesigner

StreamDiffusion is an open-source diffusion pipeline built for real-time, interactive image generation. It offers significant performance advantages over traditional diffusion-based systems, which makes it practical for artists, developers, and other creators working with live visuals. Combined with a platform such as TouchDesigner, it can drive real-time video generation, interactive installations, and immersive experiences.

Key Features of StreamDiffusion

At its core, StreamDiffusion is designed for speed and efficiency. Its key technical features include:

- Stream Batch: Restructures the sequential denoising steps into a batched operation, so successive frames move through the pipeline as a continuous stream and generation can keep up with real-time input.

- Residual Classifier-Free Guidance: A technique that reduces computational costs without sacrificing output quality.

- Stochastic Similarity Filter: Improves GPU efficiency by skipping computation when consecutive input frames are nearly identical.

- Input-Output Queue: A system that parallelizes the streaming process for smoother and faster performance.

- Model Acceleration Tools: Tools and techniques for speeding up diffusion model inference.
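To make the Stochastic Similarity Filter idea concrete, here is a minimal sketch in plain Python. It assumes the skip probability rises linearly from 0 at a similarity threshold to 1 for identical frames; the function names, the flattened-list frame representation, and the default threshold are illustrative choices, not taken from the StreamDiffusion codebase.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length sequences of pixel values."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero frame as dissimilar to everything
    return dot / (norm_a * norm_b)


def skip_probability(similarity, threshold):
    """Linear ramp: 0 at or below the threshold, 1 when frames are identical."""
    if threshold >= 1.0:
        return 0.0
    return min(1.0, max(0.0, (similarity - threshold) / (1.0 - threshold)))


def should_skip(frame, reference, threshold=0.98, rng=random.random):
    """Randomly decide whether to skip processing `frame` (assumed default threshold)."""
    similarity = cosine_similarity(frame, reference)
    return rng() < skip_probability(similarity, threshold)
```

Making the decision probabilistic rather than a hard cutoff avoids freezing the output whenever the input sits just above the threshold; a static camera feed is skipped most of the time, but not always.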

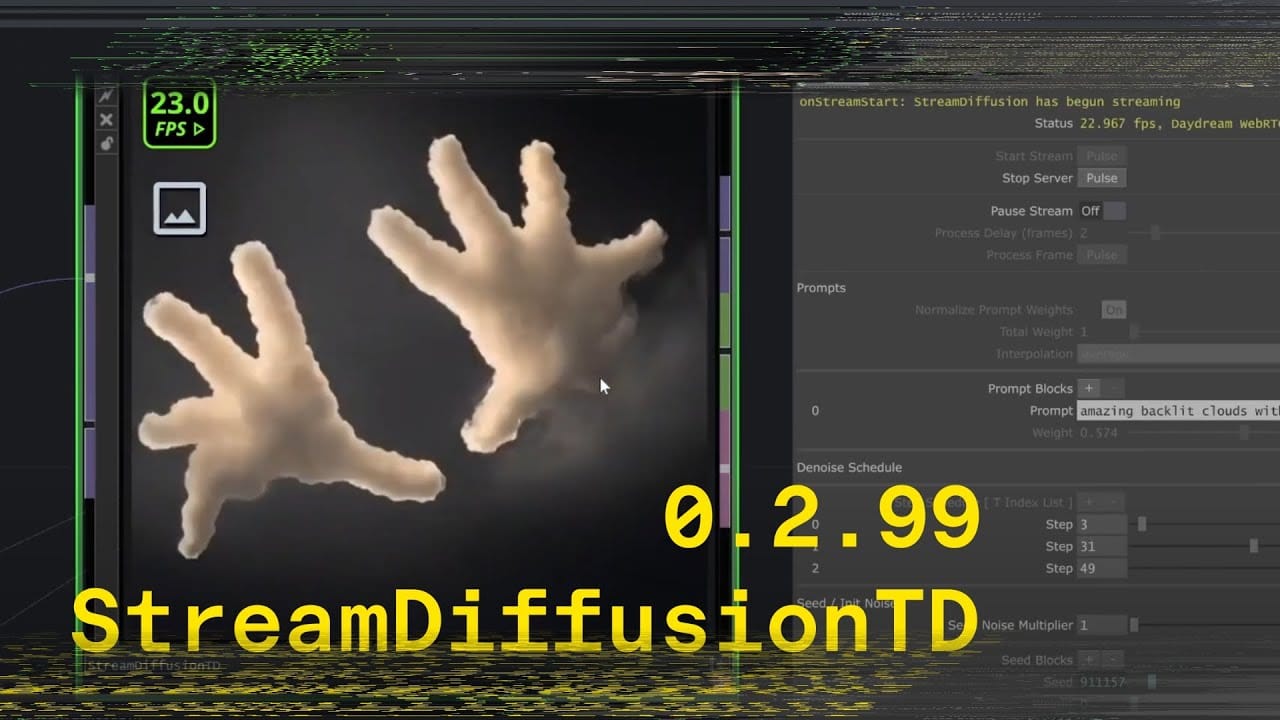

Integrating StreamDiffusion with TouchDesigner: StreamDiffusionTD

While StreamDiffusion is a powerful Python-based tool, integrating it into visual development platforms like TouchDesigner can be complex. To simplify this process, Lyell Hintz (aka @DotSimulate) created StreamDiffusionTD, a TouchDesigner operator that encapsulates all of StreamDiffusion's features in a single, user-friendly component.

StreamDiffusionTD connects real-time inputs, such as audio, sensors, and camera feeds, to the StreamDiffusion pipeline, allowing for the creation of live visuals that can be manipulated in real time. The operator is designed to be transparent, exposing core parameters to give users immediate feedback and control over the creative process.
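Live inputs such as audio levels tend to jitter, so a common pattern is to clamp and smooth them before they drive a generation parameter. The following is a minimal sketch of that pattern, assuming an input level normalized to the range 0 to 1. `SmoothedControl` and its parameter range are hypothetical helpers for illustration and are not part of StreamDiffusionTD.

```python
class SmoothedControl:
    """Map a normalized input level to a parameter range with exponential smoothing."""

    def __init__(self, lo, hi, smoothing=0.8):
        if not 0.0 <= smoothing < 1.0:
            raise ValueError("smoothing must be in [0, 1)")
        self.lo = lo
        self.hi = hi
        self.smoothing = smoothing
        self._state = None  # no history until the first update

    def update(self, level):
        """Clamp `level` to [0, 1], scale it to [lo, hi], and blend with the previous value."""
        level = min(1.0, max(0.0, level))
        target = self.lo + level * (self.hi - self.lo)
        if self._state is None:
            self._state = target
        else:
            self._state = self.smoothing * self._state + (1.0 - self.smoothing) * target
        return self._state


# Example: an audio level driving a hypothetical parameter that ranges from 0 to 10.
control = SmoothedControl(0.0, 10.0, smoothing=0.5)
for level in (1.0, 0.0, 2.0):
    print(control.update(level))
```

A higher `smoothing` value gives slower, steadier changes; a lower value tracks the input more closely at the cost of visible flicker.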

Daydream API Integration and Enhanced Features

A key feature of StreamDiffusionTD is its integration with the Daydream API, which enables remote GPU inference. This means users do not need a high-end local GPU to run StreamDiffusion, making the technology more accessible to a wider range of creators. The Daydream API integration also adds several advanced features, including:

- Multi-ControlNet: The ability to use multiple ControlNet models simultaneously for more complex and detailed visual control.

- IPAdapter: A feature that allows users to use images as style guides for the generated visuals.

- TensorRT: A high-performance deep learning inference optimizer that significantly improves frame rates.

Installation and Requirements

To get started with StreamDiffusionTD, you will need the following:

- Operating System: Windows 10 or 11

- Graphics Card: An NVIDIA graphics card with CUDA support

- Software:

  - Python 3.10.9

  - CUDA Toolkit 11.8 or 12.2

  - Git

- Hugging Face ID

The installation process involves downloading the StreamDiffusion repository, installing its dependencies, and optionally installing the TensorRT SDK for NVIDIA GPUs. Once the prerequisites are met, you can drag the StreamDiffusionTD tox file into your TouchDesigner project to get started.
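Before installing, it can help to confirm that the prerequisites listed above are visible on the machine. The script below is a rough sketch using only the Python standard library. It assumes that finding `git`, `nvidia-smi`, and `nvcc` on the `PATH` is a reasonable proxy for Git, the NVIDIA driver, and the CUDA Toolkit being installed; it does not verify the CUDA version, and `check_prerequisites` is a hypothetical helper, not part of the StreamDiffusionTD installer.

```python
import platform
import shutil
import sys


def check_prerequisites():
    """Return a dict of heuristic pass/fail checks for the listed requirements."""
    return {
        "windows": platform.system() == "Windows",
        "python_3_10": sys.version_info[:2] == (3, 10),
        "git": shutil.which("git") is not None,
        # Presence on PATH only; versions are not inspected.
        "nvidia_driver": shutil.which("nvidia-smi") is not None,
        "cuda_toolkit": shutil.which("nvcc") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A failed check is a prompt to look more closely rather than proof of a problem, since tools can be installed without being on the `PATH`.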

Using StreamDiffusionTD

StreamDiffusionTD offers three main functionalities:

- Text-to-Image: Generate images from text prompts.

- Image-to-Image: Transform existing images into new creations.

- Video-to-Video: Apply real-time effects and transformations to video footage.

By adjusting the various parameters within the StreamDiffusionTD component, users can create a wide range of unique and dynamic visuals, from audio-reactive concert visuals to camera-driven generative art for large-scale displays. The operator can also be extended and customized using Python, MIDI, OSC, and other inputs supported by TouchDesigner.